Condolences

Is this calculus for babies? Find the goo goo of this gaga gradient

It's perfectly valid to have taken calc 2 three years ago

It's so funny to look at that and realize I used to struggle with it / post about it

One thing I realized is that regardless of how difficult a problem feels at the moment, in three years I will see it as for babies

I had this misconception going into calc that it was like the end of the road or like some math final boss. I was wholly unaware that it is essentially the first step for any STEM discipline

It's the pacing of a college semester that makes things like that. Months-long sprints from concept to concept. It takes time for things to settle into your mind palace

Imagine asking for help here when AI exists. Am I still living in the 2010s?

Go ahead and solve that with AI. Let me know how that works out for you.

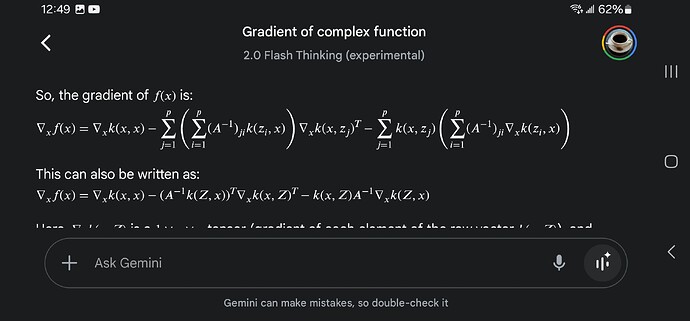

Bro, AI can literally do almost everything now. It is improving so rapidly it's terrifying hahaha. I remember 2 years ago they couldn't even make code that compiled consistently and just threw in bullshit functions that don't exist, so I wrote it off. But now I can literally just plug and play a ton of the code, and so many other things like dumping in spreadsheets, pictures, graphs, W-2 forms and taxes... Just fucking crazy man. I'll plug it into Gemini Deep Think right now and get back to you in a sec.

It's like: here is all the raw data, do this work for me and do it perfectly, because you know exactly what I mean and you understand me perfectly. Like a super assistant.

Answer looks like bullshit, so I don't think it was written correctly by the OP. Let me double check

Fuck, no LaTeX support. BRB.

Just show me the actual text of the problem. Here's what the AI wrote, but after looking at the problem I want to know what k(x, x) is. Like, how can you have multivariable function notation when there is only 1 variable?

Ask Google Gemini why I would want to avoid a matrix inversion.

Here's the answer to my question from a month ago: I need to compute a low-rank approximation (either Nyström or RandNLA) of A and then outright compute A^-1, and then I could hold A^-1 constant during my optimization of x. I can't find the matrix-vector product Ax instead with CGM.
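
For anyone following along, here is a rough sketch of what that could look like with the RandNLA route (a randomized range finder). A few things here are my assumptions, not from the problem: A is symmetric PSD, the matrix actually inverted is a regularized `lam * I + A` (a purely low-rank matrix has no inverse on its own), and the names `randomized_low_rank`, `regularized_inverse`, `lam`, and the toy matrix are made up for illustration.

```python
import numpy as np

def randomized_low_rank(A, k, rng):
    """Randomized range finder: A ~= Q @ B @ Q.T for a symmetric matrix A."""
    n = A.shape[0]
    omega = rng.standard_normal((n, k))   # random Gaussian test matrix
    Q, _ = np.linalg.qr(A @ omega)        # orthonormal basis for the sketched range
    B = Q.T @ A @ Q                       # small k x k core matrix
    return Q, B

def regularized_inverse(Q, B, lam):
    """Dense inverse of lam*I + Q @ B @ Q.T, needing only a k x k inverse.

    Assumes Q has orthonormal columns and lam > 0 (lam is an assumed ridge term).
    """
    n, k = Q.shape
    core = np.linalg.inv(lam * np.eye(k) + B)
    return (np.eye(n) - Q @ Q.T) / lam + Q @ core @ Q.T

rng = np.random.default_rng(0)
n, r, lam = 200, 5, 0.1
F = rng.standard_normal((n, r))
A = F @ F.T                               # toy symmetric PSD matrix of exact rank r

Q, B = randomized_low_rank(A, k=r + 5, rng=rng)   # a little oversampling
A_inv = regularized_inverse(Q, B, lam)            # computed once, then held fixed

x = rng.standard_normal(n)
step = A_inv @ x                          # reuse A_inv at every step of the optimization over x
```

The point of the design is that the only inverse ever taken is k x k, and `A_inv` gets reused at every iteration instead of being recomputed. On the toy matrix the approximation is essentially exact because the rank is below the sketch size; on a real kernel matrix the error depends on how fast the spectrum decays.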

AI must really feel like magic if you're too stupid to know if the stuff it spits out is correct or not